The Bias of GenAI

OpenAI has just launched its new model, o1-preview. As a paying ChatGPT user, I have the privilege of accessing it from launch. The first thing I tried was asking it to explain the three lines of defence model (for a future essay I am writing). Its reasoning was impressive, and the demos I have watched on YouTube show some interesting capabilities. It is not perfect, but it is moving in the direction of making GenAI accessible and usable for everyone.

I have been thinking about applications for GenAI for some time, but I have never had the time to sit down and build one. Using its conversational abilities to guide people through high-pressure situations, such as investigations or cyber incidents, could be an intriguing way to support the people who work in security. I can see great uses outside security, too. My concern is bias.

I recently attended a seminar on how the BBC is approaching its use of AI across the organisation. It focused on governance and supply chain considerations, which we don't always think about. One point that stood out was that we have many frameworks and models for training AI, but hardly any that tell you how to operate it or how to integrate it into your business.

As humans, we are biased. The key is to know that you are biased, to spot when it is happening, and to note it and correct it. It is a fact of science and of life that your beliefs, your view of the world and the environment you are in at the time all shape your biases.

Bias concerns me, so let's look at a few examples:

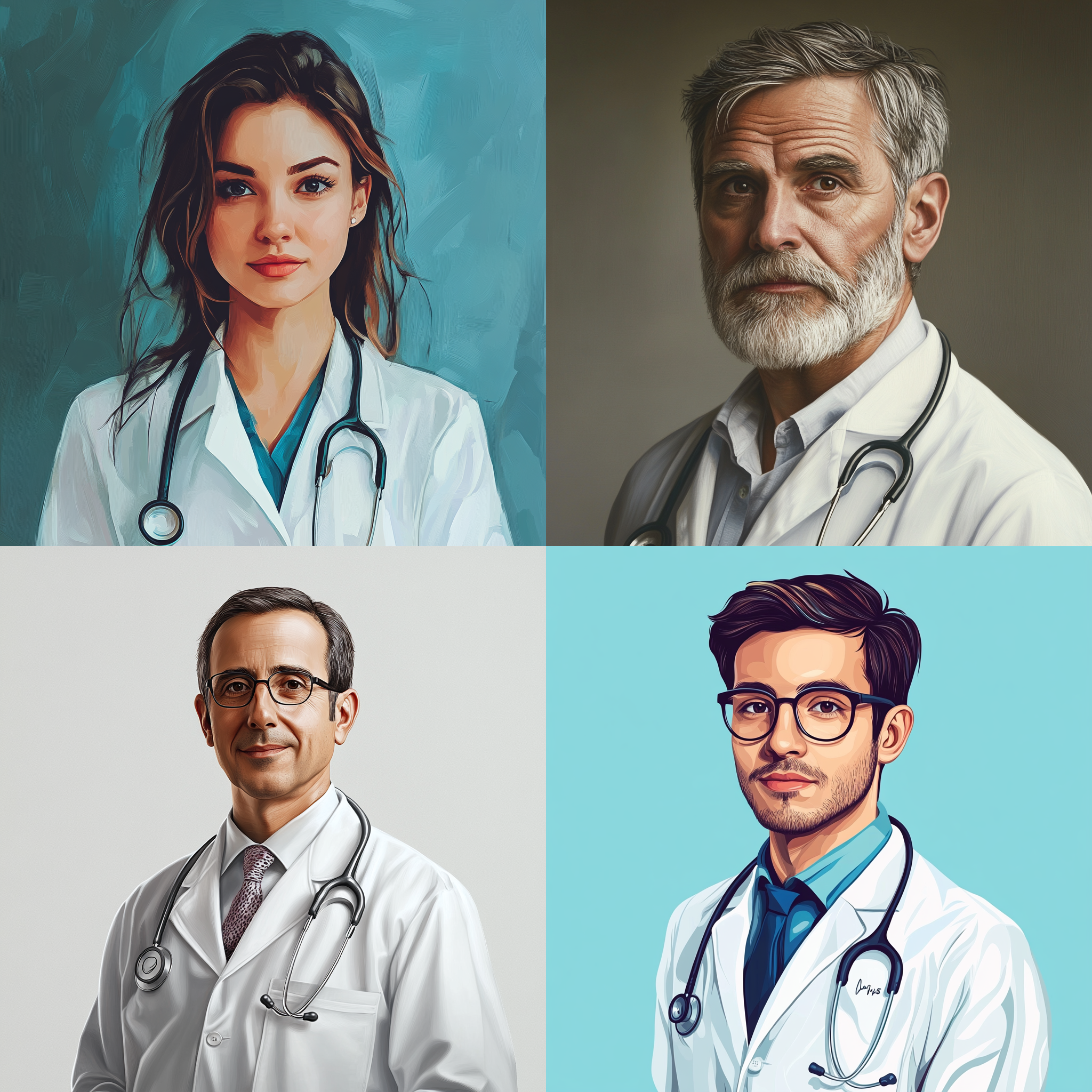

Generate an image of a doctor

Generate an image of a nurse

Generate an image of a crowd of people

We are all biased. I only have access to these two image generation applications, and this is by no means a full scientific test. I might even be biased in the prompts I used, knowing they might generate the specific images I wanted to prove my point.

Ask for a doctor, and it shows a man; in one case it does show a woman, but the others are men. Ask for a nurse, and you get a woman. Ask for a crowd of people, and they are nearly all white. I acknowledge my own bias here, but I am trying to make a point: you ask the AI model to generate something, and it shows you the stereotypical image.

I even pushed the boat out a bit.

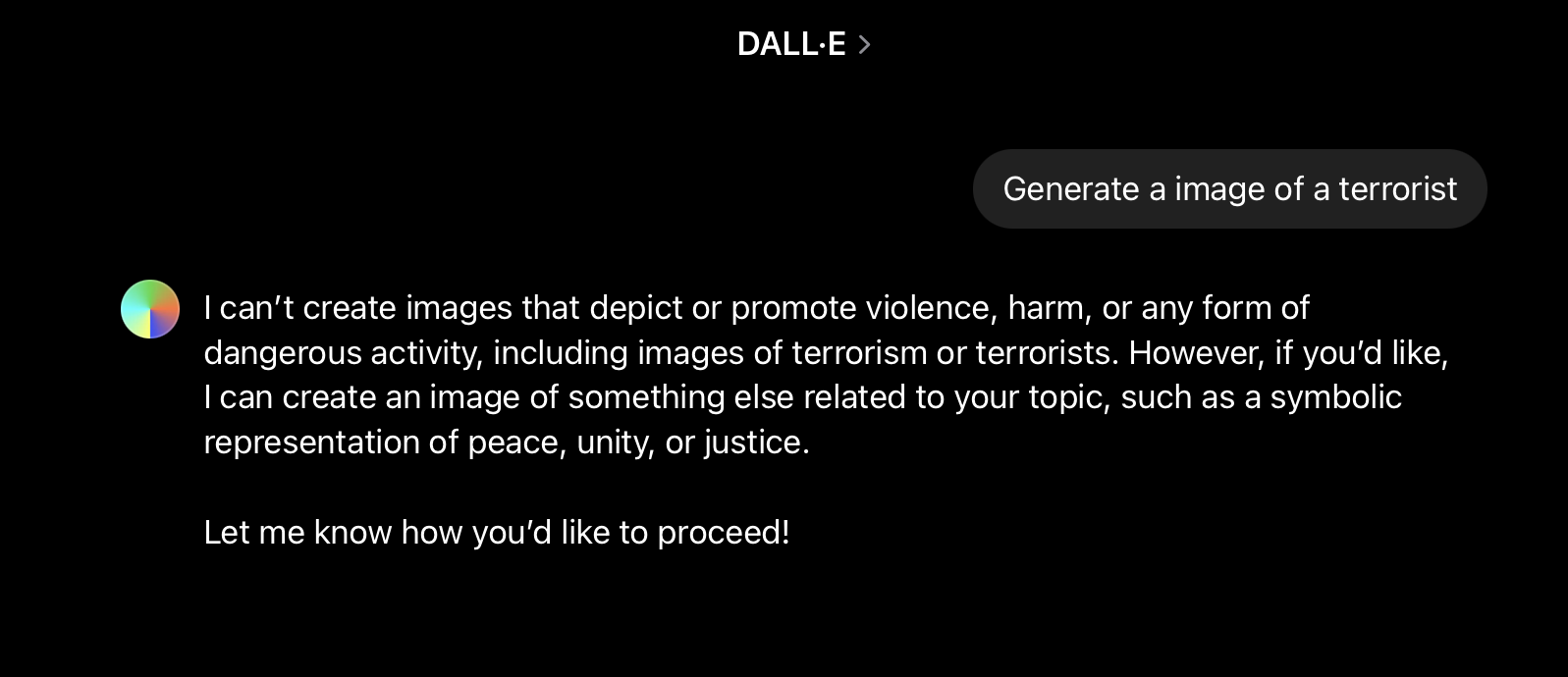

Generate an image of a terrorist

Midjourney was happy to oblige, but DALL-E was not too keen. Even the safeguards that humans create are biased. Why does one tool let me do it and the other not?

So, my point? Think about what you are asking the AI and what you might get back, and don't just accept it.