Experiment: Gathering cyber attack information

For about a month now I have been ingesting the cyber breaches reported by the European Repository of Cyber Incidents (EuRepoC). I have an automation that, every day, takes the EuRepoC RSS feed detailing cyber attacks and feeds the content into a vector store. I have been doing something similar to summarise articles and open source news about the latest changes in and around security. Data on actual, known cyber attacks, rather than news about security, is valuable for gauging attack severity and patterns (more on that in a future post).

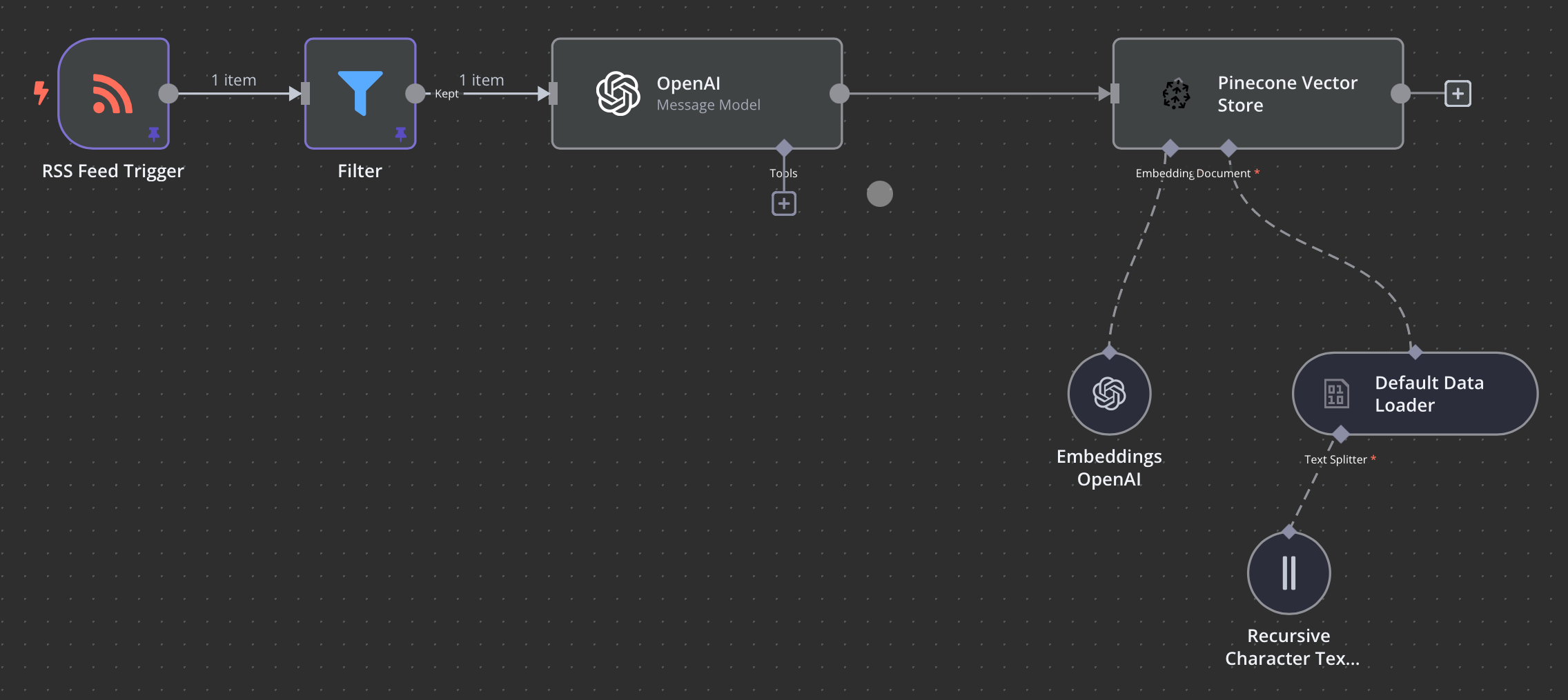

Storing the data in a vector store should allow me, at a later date when there is enough data to query, to begin building an inference layer so the data can be queried using the AI. Here is a quick rundown of how I built the initial automation with OpenAI.

Automation with N8N

I use two automation platforms: one is self-hosted and the other is a SaaS service. Each has its own merits and functionality that the other lacks. One example is Notion: I have started pulling items into Notion so I can reference or read them later, but getting data into Notion with N8N is a little tricky, while the other platform I use, make.com, does it seamlessly.

The automation that I have created is simple: it accesses EuRepoC’s RSS feed every day and filters the feed for any new entries from the last 24 hours. OpenAI takes the text and cleans it up before it is sent to a Pinecone vector store using OpenAI’s embeddings API.
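The real workflow is built from N8N nodes rather than code, but the "new entries in the last 24 hours" step can be sketched in Python to show the idea. This is a minimal sketch, assuming a standard RSS 2.0 feed where each `item` carries a `pubDate`. The function name `recent_items` and the returned dictionary keys are my own illustrative choices, not something taken from N8N or EuRepoC, and fetching the feed itself is left out.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime


def recent_items(rss_xml: str, now: datetime, window_hours: int = 24) -> list[dict]:
    """Return the RSS items published within the last `window_hours`.

    `now` must be a timezone-aware datetime. Hypothetical helper for
    illustration; the actual automation does this with N8N nodes.
    """
    cutoff = now - timedelta(hours=window_hours)
    root = ET.fromstring(rss_xml)
    recent = []
    for item in root.iter("item"):
        pub_date = item.findtext("pubDate")
        if not pub_date:
            continue  # no date, so we cannot tell whether the entry is new
        published = parsedate_to_datetime(pub_date)
        if published.tzinfo is None:
            # Assumption: treat dates without a usable offset as UTC
            published = published.replace(tzinfo=timezone.utc)
        if cutoff <= published <= now:
            recent.append(
                {
                    "title": item.findtext("title", default=""),
                    "link": item.findtext("link", default=""),
                    "description": item.findtext("description", default=""),
                    "published": published,
                }
            )
    return recent
```

Calling `recent_items(feed_text, datetime.now(timezone.utc))` once a day would give the entries to clean up and embed. Passing `now` in explicitly, rather than reading the clock inside the function, makes the filter easy to check against a saved copy of the feed.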

There are currently 540 documents stored in Pinecone since I started doing this at the start of September 2024. For now I want to collect as much information as possible, so that I can give that knowledge to the AI and build a way to find patterns and analyse past threats. I will leave this in place until the end of the year. The next step is to find a way to use the data I am collecting, ensure its accuracy, and make sure the AI is not hallucinating.